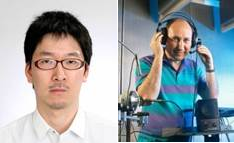

Prof. Sharon Gannot, Bar-Ilan University, Ramat Gan, Israel, and Prof. Shinji Watanabe, Johns Hopkins University, Baltimore, USA, will visit the Communications Engineering group (Fachgebiet Nachrichtentechnik) at Paderborn University on Monday, October 8.

Room change: P1.5.08.2

Prof. Gannot ([www.eng.biu.ac.il/gannot/](https://www.eng.biu.ac.il/gannot/)) is a very well-known figure in the field of audio signal processing and has made many important contributions to multichannel acoustic signal processing (acoustic beamforming).

Prof. Watanabe ([engineering.jhu.edu/ece/faculty/shinji-watanabe/](https://engineering.jhu.edu/ece/faculty/shinji-watanabe/)), in turn, is a well-known expert in machine learning methods for speech processing, in particular automatic speech recognition.

In their talks at Paderborn University, they will look at current developments in speech and audio signal processing from very different perspectives, for example new signal processing methods for robust speaker localization and tracking, and neural end-to-end optimization of speech recognition systems. You are cordially invited not only to these talks but also to talk with the guests, both before and after the lectures!

- Speech Dereverberation using EM Algorithm and Kalman Filtering

Sharon Gannot, Bar-Ilan University, Israel

(joint work with Boaz Schwartz and Emanuël A.P. Habets)

Reverberation is a typical acoustic phenomenon caused by a multitude of reflections from the walls, ceiling, and objects in enclosures. Reverberation is known to degrade the accuracy of automatic speech recognition systems as well as the quality of the speech signals. In severe cases, it can also hamper the intelligibility of the speech signals. Dereverberation algorithms aim to reduce the reverberation and, as a result, to emphasize the anechoic speech signal.

This work takes a non-Bayesian, maximum-likelihood (ML) approach to single-speaker dereverberation using multiple microphones. We first define a statistical model for the speech signal, the associated acoustic impulse responses, and the speech and noise power spectral densities. The expectation-maximization (EM) algorithm is then employed to infer the ML estimate of the deterministic parameters. It is shown that the clean speech is estimated in the E-step using a Kalman smoother, while the acoustic parameters are updated in the M-step. For online applications and dynamic scenarios, i.e., when the speaker and/or the microphones are moving, we derive a recursive EM (REM) algorithm that uses the Kalman filter rather than the Kalman smoother and updates the parameters online using only the currently observed data.
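To make the filter/smoother distinction concrete, the following minimal sketch uses a scalar first-order autoregressive state instead of the multichannel speech and acoustic-impulse-response model of the talk. The names `kalman_filter` and `rts_smoother` and the parameters `a`, `q`, `r` are illustrative assumptions. The forward pass is what an online (REM-style) method can run frame by frame; the Rauch-Tung-Striebel backward pass additionally uses future observations, which is why a batch E-step can rely on a smoother. The model parameters are assumed known here; in an EM scheme an M-step would re-estimate them from the smoothed statistics.

```python
from typing import List, Sequence, Tuple


def kalman_filter(
    y: Sequence[float], a: float, q: float, r: float, m0: float = 0.0, p0: float = 1.0
) -> Tuple[List[float], List[float], List[float], List[float]]:
    """Scalar Kalman filter for the assumed toy model
    x_t = a * x_{t-1} + w_t,  y_t = x_t + v_t,  w_t ~ N(0, q),  v_t ~ N(0, r).
    Returns filtered means/variances and one-step predicted means/variances."""
    m_f, p_f, m_p, p_p = [], [], [], []
    m, p = m0, p0
    for yt in y:
        # Predict: propagate the previous estimate through the state model.
        mp = a * m
        pp = a * a * p + q
        # Update: blend prediction and observation via the Kalman gain.
        k = pp / (pp + r)
        m = mp + k * (yt - mp)
        p = (1.0 - k) * pp
        m_p.append(mp)
        p_p.append(pp)
        m_f.append(m)
        p_f.append(p)
    return m_f, p_f, m_p, p_p


def rts_smoother(
    m_f: List[float], p_f: List[float], m_p: List[float], p_p: List[float], a: float
) -> Tuple[List[float], List[float]]:
    """Rauch-Tung-Striebel backward pass: refines each filtered estimate
    using all observations, including those after time t."""
    n = len(m_f)
    m_s, p_s = list(m_f), list(p_f)  # the last frame is already "smoothed"
    for t in range(n - 2, -1, -1):
        g = p_f[t] * a / p_p[t + 1]  # smoother gain
        m_s[t] = m_f[t] + g * (m_s[t + 1] - m_p[t + 1])
        p_s[t] = p_f[t] + g * g * (p_s[t + 1] - p_p[t + 1])
    return m_s, p_s
```

The smoothed variances are never larger than the filtered ones, reflecting the extra information from future frames; the price is that the smoother has to wait for the whole batch, which is what the recursive variant avoids.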

Two extensions of this approach were developed as well. The first is a segmental algorithm that takes an intermediate, batch-recursive approach: iterations are performed over short segments, while smoothness between the parameters estimated in consecutive segments is preserved. The latency of the segmental algorithm can therefore be controlled by setting the segment length, while the accuracy of the estimates can be improved iteratively. The second extension is a binaural algorithm, mainly applicable to hearing aids, that trades off reverberation reduction against preservation of the user's spatial perception.
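The latency/accuracy trade-off of the segmental extension can be illustrated with a small control-flow sketch. This is an assumption-laden illustration, not the authors' algorithm: `refine` stands in for one EM iteration on a segment (here a hypothetical `toy_refine` that moves a scalar power estimate toward the segment's mean power), and smoothness across segments is approximated simply by warm-starting each segment from the previous segment's estimate. The hypothetical `algorithmic_latency_s` helper assumes a frame-based front end with a hop size of `hop` samples at sampling rate `fs`.

```python
from typing import Callable, List, Sequence


def segmental_estimate(
    frames: Sequence[float],
    seg_len: int,
    n_iter: int,
    theta0: float,
    refine: Callable[[Sequence[float], float], float],
) -> List[float]:
    """Run n_iter refinement iterations on each segment, warm-starting every
    segment from the previous segment's final estimate (hypothetical scheme)."""
    thetas: List[float] = []
    theta = theta0
    for start in range(0, len(frames), seg_len):
        segment = frames[start:start + seg_len]
        for _ in range(n_iter):
            theta = refine(segment, theta)  # stand-in for one EM iteration
        thetas.append(theta)
    return thetas


def algorithmic_latency_s(seg_len: int, hop: int, fs: float) -> float:
    # A segment can only be processed once all of its frames have arrived.
    return seg_len * hop / fs


def toy_refine(segment: Sequence[float], theta: float) -> float:
    # Hypothetical update: move halfway toward the segment's mean power.
    power = sum(x * x for x in segment) / len(segment)
    return theta + 0.5 * (power - theta)
```

In this sketch, a larger `seg_len` raises the latency but gives each iteration more data, while a larger `n_iter` refines the per-segment estimate without changing the latency.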

An extensive simulation study, as well as real recordings of moving speakers in our acoustic laboratory, demonstrates the performance of the presented algorithms.

Sharon Gannot

His research interests include statistical signal processing and machine learning algorithms (including manifold learning and deep learning) with applications to single- and multi-microphone speech processing, specifically distributed algorithms for ad hoc microphone arrays, speech enhancement, noise reduction and speaker separation, dereverberation, single-microphone speech enhancement, and speaker localization and tracking.

Dr. Gannot was an Associate Editor for the EURASIP Journal on Advances in Signal Processing during 2003–2012, and an Editor for several special issues of the same journal on multi-microphone speech processing. He was a Guest Editor for the Elsevier Speech Communication and Signal Processing journals. He is currently the Lead Guest Editor of a special issue on speaker localization for the IEEE Journal of Selected Topics in Signal Processing. He was an Associate Editor for the IEEE Transactions on Audio, Speech, and Language Processing during 2009–2013, and the Area Chair for the same journal during 2013–2017. He is currently a Moderator for arXiv in the field of audio and speech processing. He is also a Reviewer for many IEEE journals and conferences. Since January 2010, he has been a Member of the Audio and Acoustic Signal Processing technical committee of the IEEE and, since January 2017, has served as the Committee Chair. Since 2005, he has also been a Member of the technical and steering committee of the International Workshop on Acoustic Signal Enhancement (IWAENC) and was the General Co-Chair of the IWAENC held in Tel-Aviv, Israel, in August 2010. He was the General Co-Chair of the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics in October 2013. He was selected (with colleagues) to present tutorial sessions at ICASSP 2012, EUSIPCO 2012, ICASSP 2013, and EUSIPCO 2013 and was a keynote speaker for IWAENC 2012 and LVA/ICA 2017. He was the recipient of the Bar-Ilan University Outstanding Lecturer Award in 2010 and 2014 and the Rector Innovation in Research Award in 2018. He is also a co-recipient of ten best paper awards.

- Multichannel End-to-End Speech Recognition

The field of speech recognition is in the midst of a paradigm shift: end-to-end neural networks are challenging the dominance of hidden Markov models as a core technology. Using an attention mechanism in a recurrent encoder-decoder architecture solves the dynamic time alignment problem, allowing joint end-to-end training of the acoustic and language modeling components. In this talk, we extend the end-to-end framework to encompass microphone array signal processing for noise suppression and speech enhancement within the acoustic encoding network. This allows the beamforming components to be optimized jointly within the recognition architecture to improve the end-to-end speech recognition objective. Experiments on noisy speech benchmarks (CHiME-4 and AMI) show that our multichannel end-to-end system outperforms an attention-based baseline whose input comes from a conventional adaptive beamformer. In addition, the talk analyzes whether the integrated end-to-end architecture actually learns a speech enhancement capability superior to that of a conventional alternative (a delay-and-sum beamformer), using two signal-level measures: the signal-to-distortion ratio and the perceptual evaluation of speech quality. Finally, the talk introduces a multi-path adaptation scheme for end-to-end multichannel ASR, which combines the unprocessed noisy speech features with a speech-enhanced pathway to improve upon previous end-to-end ASR approaches.
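For reference, the conventional delay-and-sum beamformer used as a comparison point can be sketched in a few lines. This minimal version assumes the per-microphone arrival delays of the target are already known and are whole numbers of samples; practical systems estimate the delays and apply fractional delays, typically in the STFT domain. The function name `delay_and_sum` is illustrative. Unlike the jointly optimized beamformer described in the talk, nothing here is learned: the channels are simply time-aligned and averaged.

```python
import numpy as np


def delay_and_sum(signals: np.ndarray, delays: np.ndarray) -> np.ndarray:
    """Time-domain delay-and-sum beamformer (integer-sample delays assumed).

    signals: array of shape (n_mics, n_samples).
    delays:  non-negative integer delays (in samples) of the target at each
             microphone, relative to the earliest-arriving microphone.
    Returns the time-aligned average, of length n_samples - max(delays).
    """
    n_mics, n_samples = signals.shape
    delays = np.asarray(delays, dtype=int)
    out_len = n_samples - int(delays.max())
    out = np.zeros(out_len)
    for m in range(n_mics):
        d = int(delays[m])
        # Advance channel m by its delay so the target lines up across channels.
        out += signals[m, d:d + out_len]
    # Averaging keeps the aligned target intact, while uncorrelated noise
    # power drops by roughly a factor of n_mics.
    return out / n_mics


# Usage: a random "target" observed by three microphones with known delays.
rng = np.random.default_rng(0)
target = rng.standard_normal(16000)
mic_delays = np.array([0, 3, 7])
mics = np.zeros((3, target.size))
for m, d in enumerate(mic_delays):
    mics[m, d:] = target[: target.size - d]  # mic m hears the target d samples late
mics += 0.5 * rng.standard_normal(mics.shape)  # independent sensor noise
enhanced = delay_and_sum(mics, mic_delays)
```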

Shinji Watanabe